Research Recap: How much Latin does ChatGPT "know"?

Why is ChatGPT—OpenAI’s chatbot-style large language model (LLM) and focus of recent artificial-intelligence buzz—good at so many Latin tasks? Wait, is it good at Latin?

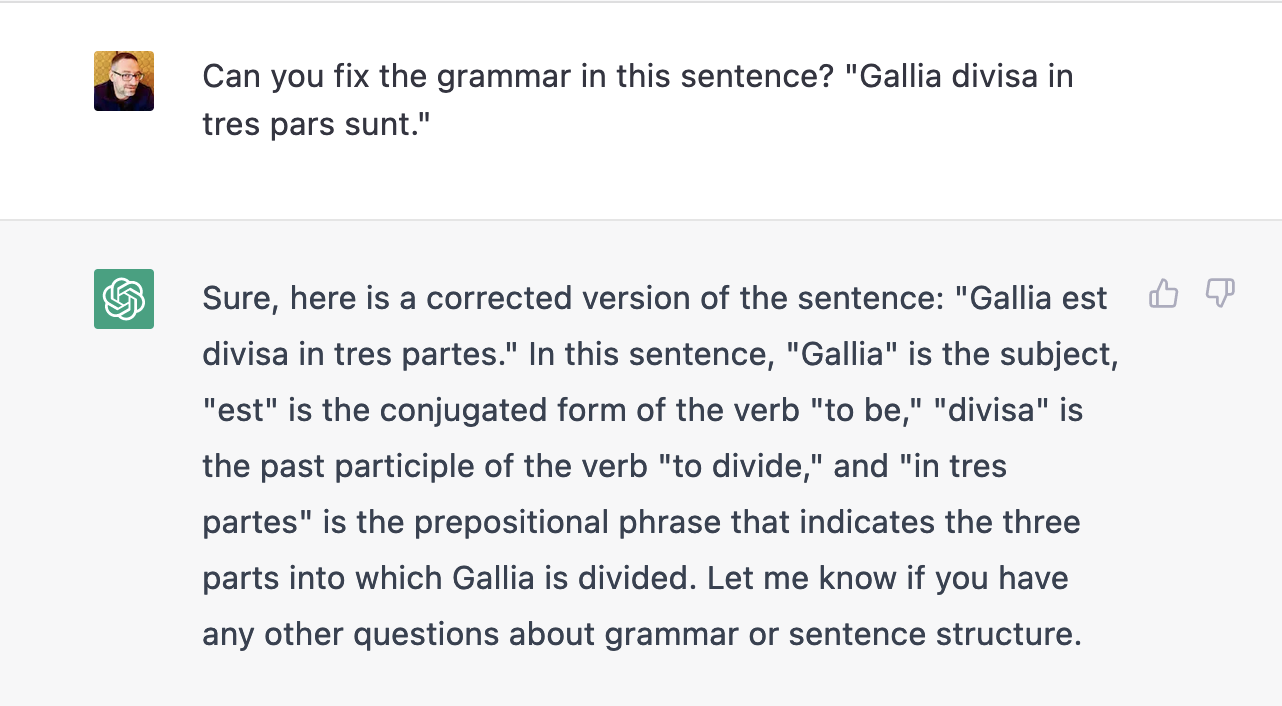

For those of you who have yet to kick its rotas (i.e. wheels), the answer is decidedly, yes! It can correct errors in sentences. It will write short Latin stories and then produce reading comprehension sentences (in Latin!) based on that story. It will lemmatize sentences and provide part-of-speech tags. It will try its hand at the Latin Prose Comp challenge of writing in style of Cicero—and then rewrite in the style of Tacitus or even Virgil. And for all of these tasks it has a charming, if pedantic, habit of showing its work. Not only can I ask for the correction of a grammatically incorrect sentence, but I also get some supporting sentences explaining the “decisions” made.

Figure 1. An example of ChatGPT correcting the grammar of a simple Latin sentence.

Are the results perfect? Hardly. But often they are good or at least good enough. It would take more than a blog post to dive deeply into the mechanics of LLMs, but there is a quick way into a discussion of their effectiveness. ChatGPT is good at Latin because ChatGPT has seen a lot of Latin. In a recent talk at the Annual Meeting of the Classical Association of New England this March, I asked “How much Latin does ChatGPT ‘know’?” and in today’s post I tackle this quantitative question head on. Before we can get into any discussion of the “unreasonable effectiveness” of a general-purpose LLM on any number of Latin activities, we need to understand what is being modeled. So our question—how many Latin words are in the ChatGPT training data?

Before getting underway, I should stress that this exercise in Latin word-counting is a thought experiment, like counting the windows in Manhattan, designed to provoke thinking about what it means to work with millions and millions of Latin words. But as there as just too many unknowns about the ChatGPT training data, it is important to recognize that what follows is more informed guesswork than exact measurement.

We do know roughly how many tokens—that is, words and wordlike elements such as numbers and punctuation—in any language are in training data for GPT-3, that is the LLM behind ChatGPT: 499 billion. But how many of these 499B tokens could possibly be Latin? And not just the occasional quid pro quo or carpe diem, but the kind of continuous Latin prose necessary to model the language sufficiently for next-word prediction at scale. Unfortunately, ChatGPT offers no help: “As a language model, I don’t have a direct way to count the number of words in my training data that belong to a specific language such as Latin.” (That would have been too easy!) So we need to estimate, which in turn becomes an interesting of its own—since the “technology has basically devoured the entire Internet,” how much Latin exists in different pockets of the internet?

The GPT-3 paper lists five large text collections as training data: Common Crawl, WebText2, Books1, Books2, and Wikipedia.

|

Source |

Tokens |

~ % Latin |

~ Latin Total |

|

Common Crawl |

410B |

0.05% |

205M |

|

WebText2 |

19B |

0.04% |

7.6M |

|

Books1 |

12B |

0% |

0 |

|

Books2 |

55B |

0.23% |

126.5M |

|

Wikipedia |

3B |

0% |

0 |

|

TOTAL |

499B |

339.1M |

Table 1. Training data for GPT-3 with estimates of Latin content.

We can remove Wikipedia from consideration. Even though Latin has a sizeable presence among the Wikipedias—a point I return to shortly—the paper is explicit that this refers solely to “English-language Wikipedia.” We can also remove Books1. In a description of that dataset, it is described as “11,038 books from the web, … free books written by yet unpublished authors” in genres such as romance, fantasy, and science fiction. It is perhaps overstating the case to say that the Latin content of this collection is minimal.

The other three collections show more promise as sources of significant amounts of Latin data. Let’s take them in size order.

As described in the GPT-2 paper, WebText2 is a collection of curated web content based on “outbound links from Reddit” heuristically determined to be “interesting, educational, or just funny.” The most active Latin-themed Reddit—r/latin—has almost 80,000 members and is a “top 5%” subreddit, so we can be confident that some of these outbound links lead to Latin content. If we assume that the linguistic diversity of these links corresponds roughly to the linguistic diversity of the internet as a whole, we can hazard a guess at WebText2’s Latin content. Here is one estimate of “usage statistics of Latin as content language on the web”: around 0.04%. A tiny figure compared to other languages—57.7% of sites in this survey are in English, 5.3% in Russian, 4.5% in Spanish, etc.—but honestly that makes sense. So, applying this percentage to WebText2 (19B X .0004) we get 7.6M Latin tokens.

Books2 is a mystery. As one blogger writes: “No one knows exactly what this is.” The preprint cited in the GPT-3 paper describes Books2 simply as “a collection of publicly-available Internet Books.” One theory is that it is a shadow library like Libgen. Regardless of the exact source we can be assured that there is a sizeable amount of Latin material contained within. And due to digitization patterns in recent decades, it is likely that the percentage of Latin is a bit higher than that of the general internet. We see such patterns, for example, in the amount of Latin in large scanning projects like Google Books. But the percentage of Latin in such collections is still hard to estimate. What can we use as a proxy? Another important source of language model training data that shows an overrepresentation of Latin content is Wikipedia. According to the site’s “multilingual statistics”, Vicipaedia, the Latin-language version of the site, has 137,727 articles, making it the 62rd largest Wikipedia by language. If we use this percentage of Wikipedia content as a way to determine Latin content in Books2 (55B X 0.0023), we get 126.5M Latin tokens.

We have saved the biggest for last. Common Crawl describes itself as an “open repository of web crawl data that can be accessed and analyzed by anyone.” It is not hard to understand how such a collection of multi-topic, multilingual textual data—especially one so large and covering a good temporal span (“petabytes of data collected over 12 years”)—would become useful to text-focused AI developers. This is a lot of material and, luckily for us, our job of estimating the Latin content is more straightforward since Common Crawl publishes its distribution of languages. (Complicating matters though is the quality filtering and deduplicating noted in the GPT-3 paper; the effects of these processes on Latin specifically are difficult to assess.) If we take the average percentage of Latin Common Crawl content available during GPT-3 data collection, we get 0.05%. Again, not a large number. But even a minuscule fraction of billions of tokens yields a sizeable result, and so we estimate the Latin in the Common Crawl dataset (410B x 0.0005) at an impressive 205M tokens.

Adding everything up, we can estimate that there may be something like 339.1M Latin tokens in the GPT-3 training data. (By contrast, it has been notoriously hard to get information about the training data for the latest ChatGPT base model, GPT-4; this model was trained on more data total, so it is safe to assume that this also means more Latin data.) No matter how you slice the data, this is a lot of Latin.

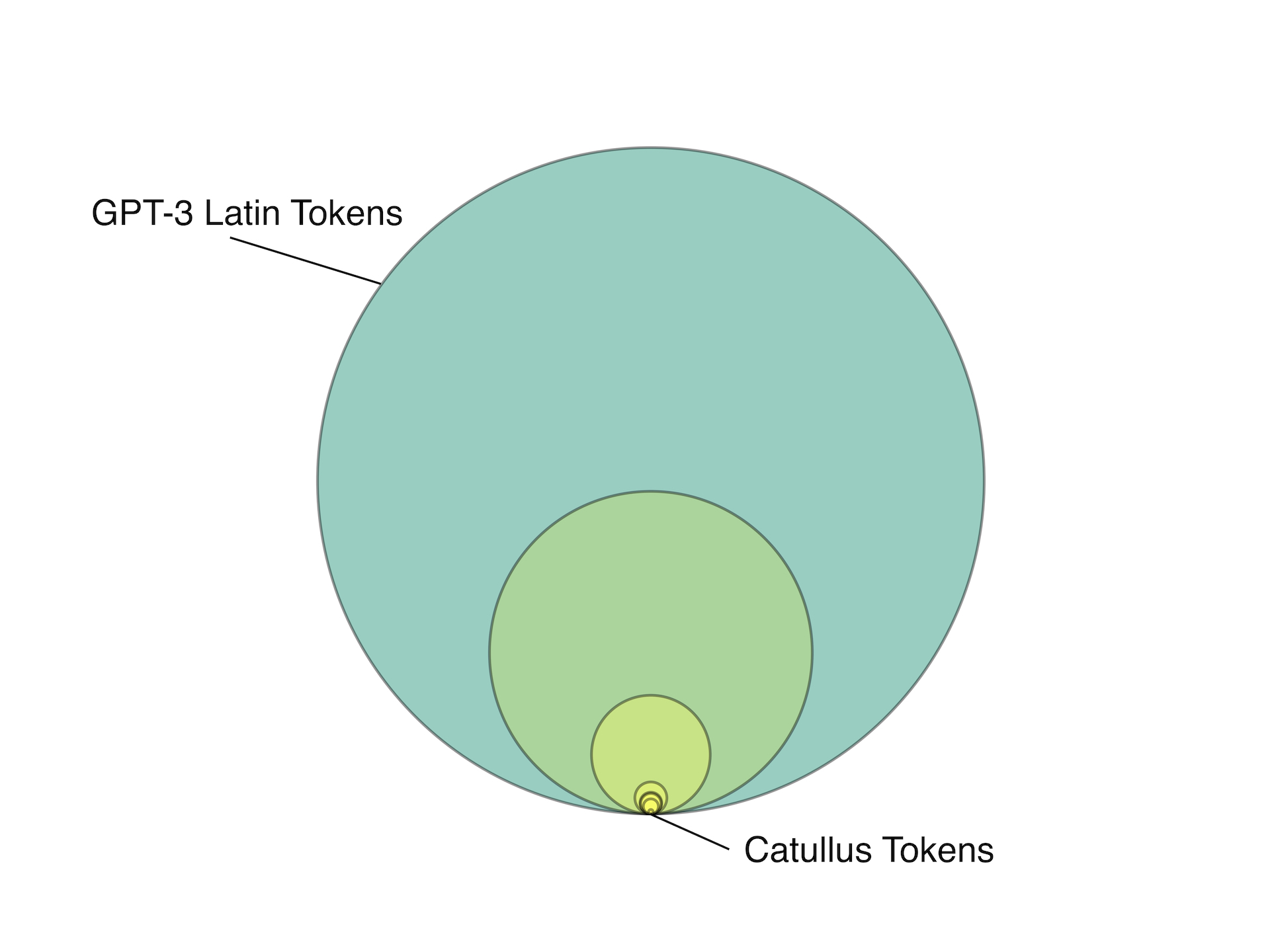

To put “a lot” into perspective, some comparative word counts:

-

Catullus’ poems: ~15,000 words

-

Virgil’s Aeneid: ~64,000 words

-

Pliny’s Historia naturalis: ~473,000 words

-

Perseus Digital Library: ~6.5M words

-

Index Thomisticus, i.e. the digitized works of Thomas Aquinas: ~14.1M words

-

Vicipaedia: ~15.8M words

-

Patrologia Latina: ~29.3M words

This is by any measure a substantial amount of reading, and almost certainly more than a lifetime of Latin reading. Yet at 66.3M words of Latin, it’s still only around a fifth of the GPT-3 estimate.

Figure 2: A nested proportional area chart based on the list of Latin works and their word counts; the smallest, yellowest circle (dot, really) represents Catullus’s 15,000 words, while the largest, greenest circle represents the 339.1M tokens that may be in the ChatGPT training data.

When we start talking about millions of Latin words, we already have some sense of what is possible. I trained a Latin model for the NLP platform spaCy on a little less than a million words: this model can predict lemmas with around 94% accuracy and part-of-speech tags with around 97% accuracy. Good performance, and on a small fraction of the data available to GPT-3. An even better comparison is with Latin BERT, a model I worked on with David Bamman. This model is trained on around 650M tokens and demonstrates excellent performance on a variety of tasks including correctly distinguishing the preposition cum from the homographic conjunction and completing fill-in-the-blank-type exercises.

We have then the provisional answer we are looking for. ChatGPT performs well on a number of Latin-language texts because it has been exposed to more Latin than any of us are ever likely to read or even encounter in any meaningful way in our lifetime. Models like GPT-3 are specifically designed to predict upcoming words in a given sequence. This kind of next-word—really, next sentence and more—prediction is a great example of a more-data problem: that is, the more text a model sees during training, the higher the number of likely collocations it can make predictions from. This is true of computational models and it is true in its own way of our readerly model of the language. After reading a good amount of Cicero, say, we too develop the intuition that if we encounter quam ob in a sentence the next word is likely to be rem. Or when we see non solum early in a sentence, the probability of seeing sed etiam soon enough is high. We proceed through our Latin texts with an ever-expanding model of what is and is not likely to happen next. But the truth is that, practically speaking, this inductive approach to modeling the Latin language is limited by the total amount of text to which we are exposed and, again, practically speaking, none of us is exposed to that much text. Not so with GPT-3, which models the language at scale and does so with a synoptic grasp of Latin co-occurrence patterns that it can draw on at a nanoseconds’ notice.

In his book Latin: Story of a World Language, Jürgen Leonhardt draws up his own thought experiment about how much Latin has ever been written: “If we assume that the sum total of Latin texts from antiquity may be snugly accommodated in five hundred volumes running an estimate five hundred pages each, we would need about ten thousand times that number, that is, at least five million additional volumes of the same size, to house the total output in Latin texts.” GPT-3—and the expanding array of other LLMs—is perhaps the closest Latinists have ever come to having Leonhardt’s five-million-volume library at hand, ready to be used. The novelty of this relationship with the language cannot be underestimated and we are just starting to understand what this expansive, on-demand model of the Latin language can do.

Accordingly, it is my hope that, by asking questions about why the model works the way it does, we are less likely to view its output as a kind of Latin-language magic trick and more as a function of how LLMs are constructed and how they make use of the data they are exposed to during training. The number of words in that data is only one question we can ask to better understand what happens between the moment when we submit our prompt to ChatGPT and the moment it responds. In order to better equip ourselves to ask other no less consequential questions, Latinists might consider reading up on natural language processing, a philologically relevant area of computer science that is likely to yield methodologically significant progress in the field in the coming years. For the ChatGPT-curious Latinist, I can recommend taking up Jurafsky and Martin’s Speech and Language Processing or Chollet’s Deep Learning alongside Leonhardt’s Latin as we turn our attention from what the model “knows” to what the model does and can do with this AI-derived Latin “knowledge.”

I would like to thank David Bamman, David Ratzan, and Clifford Robinson as well as attendees of the CANE workshop for their feedback on earlier drafts of this post.